With recent improvements in models and datasets, fine-tuning LLMs is a cost-effective and fast way to customize models for specific needs. Fine-tuning lets you explore options early in the modeling process without wasting GPU time. Axolotl has enough knobs to cover the gamut from a single GPU to GPU-rich setups, from beginner to expert level.

Fine-tuning early in a project can produce more accurate results, lower hallucination rates, and save valuable training time. For tips on selecting the right GPU, read here.

If you are fine-tuning with Llama 3.1, read more here.

Simple Steps to Fine-Tuning

As an open-source tool, Axolotl supports multiple model families such as Gemma, Llama, and Mistral, works with parameter-efficient methods such as LoRA and QLoRA, and can be set up for distributed training across several GPUs at the same time.
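To make the resource savings from LoRA concrete, here is a rough back-of-the-envelope sketch in Python. It relies on the standard LoRA construction, where a frozen weight matrix of shape `d_out x d_in` gets two small trainable matrices of rank `r`. The 4096 x 4096 layer size and rank 16 are illustrative assumptions, not values taken from Axolotl or any particular model.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for a LoRA adapter on the same matrix.

    LoRA trains A (rank x d_in) and B (d_out x rank) and keeps the
    original weight frozen, so the count is rank * (d_in + d_out).
    """
    return rank * (d_in + d_out)


# Illustrative (assumed) sizes: one square projection matrix and a small rank.
D_IN, D_OUT, RANK = 4096, 4096, 16

full = full_params(D_IN, D_OUT)
lora = lora_params(D_IN, D_OUT, RANK)
fraction = lora / full

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (r={RANK}):   {lora:,} trainable parameters")
print(f"LoRA trains {fraction:.2%} of this matrix")
```

For this single matrix, the adapter trains under 1% of the parameters that full fine-tuning would update. QLoRA goes further by also storing the frozen base weights in 4-bit precision, which is why both fit on smaller GPUs.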

Read from Google Cloud about Model Fine-tuning Made Easy

With budgets in mind, small, well-tuned models can often outperform GPT-4 on the tasks they were tuned for. The Kaitchup published a write-up on teaching a 7B-parameter model to reason using just 1,000 supervised fine-tuning samples. Nearly twice as many businesses as last year are using generative AI, up to 65% in a recent study, which means there are more LLMs in use than ever before.

Fast LLM Setup and Fine-tuning

Being able to quickly set up, run, and train models is essential in adaptive technologies, as success and accuracy rates continue to improve daily.
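As a rough illustration of how little setup is involved, the sketch below assembles a minimal QLoRA-style Axolotl config in Python and renders it as YAML text. The keys follow the pattern used in Axolotl's example configs, but the model name, dataset path, output directory, and hyperparameter values are placeholders chosen for illustration, and `render_yaml` is a hypothetical helper written for this example rather than part of Axolotl. Check the key names against the Axolotl documentation for your installed version.

```python
# Placeholder values throughout: swap in your own model, dataset, and settings.
CONFIG = {
    "base_model": "NousResearch/Meta-Llama-3.1-8B",  # assumed example model
    "load_in_4bit": True,           # 4-bit base weights (the "Q" in QLoRA)
    "adapter": "qlora",
    "lora_r": 16,
    "lora_alpha": 32,
    "lora_dropout": 0.05,
    "lora_target_linear": True,     # attach adapters to the linear layers
    "datasets": [{"path": "mhenrichsen/alpaca_2k_test", "type": "alpaca"}],
    "sequence_len": 2048,
    "micro_batch_size": 2,
    "gradient_accumulation_steps": 4,
    "num_epochs": 1,
    "learning_rate": 0.0002,
    "output_dir": "./outputs/qlora-out",
}


def scalar(value) -> str:
    """Render a scalar in YAML style (booleans become true/false)."""
    if isinstance(value, bool):
        return "true" if value else "false"
    return str(value)


def render_yaml(data: dict) -> str:
    """Hypothetical helper: render a flat dict, plus lists of flat dicts."""
    lines = []
    for key, value in data.items():
        if isinstance(value, list):
            lines.append(f"{key}:")
            for item in value:
                for i, (k, v) in enumerate(item.items()):
                    prefix = "- " if i == 0 else "  "
                    lines.append(f"  {prefix}{k}: {scalar(v)}")
        else:
            lines.append(f"{key}: {scalar(value)}")
    return "\n".join(lines) + "\n"


config_text = render_yaml(CONFIG)
print(config_text)
```

Saved as `config.yml`, a file like this is typically passed to Axolotl's training entry point, for example `axolotl train config.yml` or, for multi-GPU runs, `accelerate launch -m axolotl.cli.train config.yml`. The exact command depends on the Axolotl version you have installed.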

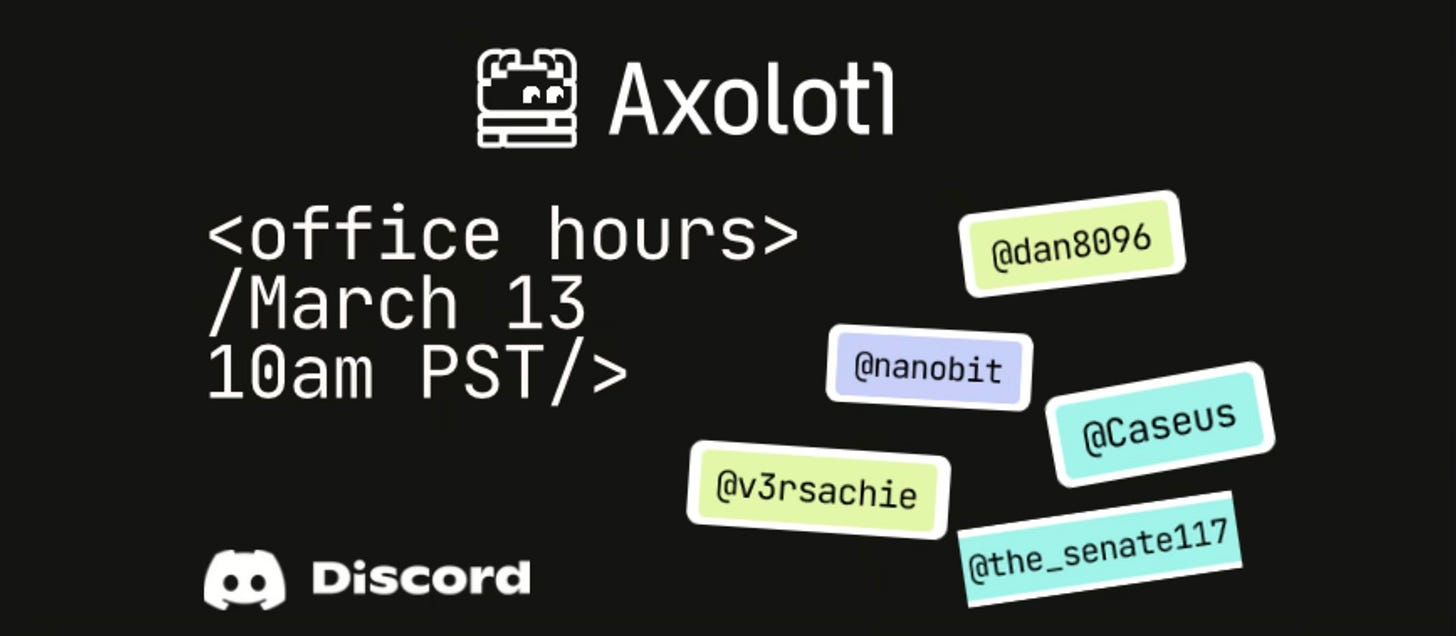

While setting up with Axolotl, you can also drop into Discord for questions and help.

There are also monthly Office Hours for real-time Q&A.